I’ve recently used several kinds of message queues when building apps whose parts need to communicate with each other. For remote use, when the messaging parties are not on the same host, I’ve mostly used Amazon SQS, the Simple Queue Service.

When using the SQS ReceiveMessage API call you can have it return almost immediately, with up to 10 messages per call if any are waiting. This is useful in some cases, but I tend to use long polling. It means that the ReceiveMessage call does not return immediately if there aren’t any messages in the queue; instead it waits for a message to arrive, for up to 20 seconds, after which it returns anyway. If a message becomes available during the wait, the call returns as soon as that happens.

Long polling makes it efficient to run message consumers because it reduces the number of API calls required while waiting for messages to arrive. In SQS the pricing is determined by the number of API calls. I don’t have a huge fleet of message producers or consumers, but polling the queues without long polling would mean calling the API at least a few times per second for every consumer to get a decent user experience, and that would easily mean tens or hundreds of millions of API calls every month. With long polling I can let the consumer issue the ReceiveMessage call, and if there aren’t any messages right now, it will just wait there until a message arrives (or the maximum wait time is reached), without extra API calls or losing the interactivity.
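To put rough numbers on that, here is a back-of-the-envelope sketch. The figures are assumptions for illustration only: 3 polls per second, 10 consumers, a 30-day month, and a queue that stays idle (every delivered message adds a few calls on top). The function names are made up for this example.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # 2,592,000 seconds in a 30-day month


def monthly_calls_short_polling(polls_per_second, consumers):
    """ReceiveMessage calls per month when every consumer polls at a fixed rate."""
    return polls_per_second * SECONDS_PER_MONTH * consumers


def monthly_calls_long_polling_idle(wait_seconds, consumers):
    """ReceiveMessage calls per month on an idle queue with long polling:
    each call blocks for the full wait time before the next one is issued."""
    return SECONDS_PER_MONTH // wait_seconds * consumers


print(monthly_calls_short_polling(3, 10))       # 77760000
print(monthly_calls_long_polling_idle(20, 10))  # 1296000
```

With these assumed numbers the idle-queue call count drops by a factor of 60, which is simply the 3 polls per second multiplied by the 20-second wait.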

With my networking professional hat on, how does the SQS long polling work anyway? Let’s see!

I wrote a small Python app (receive.py) for receiving and showing messages from an SQS queue:

import logging

import boto3

AWS_REGION = "eu-north-1"
AWS_ACCOUNT = "123456789012"
QUEUE_NAME = "testqueue"

# Make the queue URL (or just copy from the queue settings)
queue_url = f"https://sqs.{AWS_REGION}.amazonaws.com/{AWS_ACCOUNT}/{QUEUE_NAME}"

logging.basicConfig(
    level=logging.INFO,
    format="%(asctime)s %(message)s",
    datefmt="%H:%M:%S",
)
logger = logging.getLogger()

sqs = boto3.client("sqs", region_name=AWS_REGION)

logger.info("Started")
try:
    while True:
        response = sqs.receive_message(
            QueueUrl=queue_url,
            MaxNumberOfMessages=1,
            WaitTimeSeconds=20,
            VisibilityTimeout=30,
        )
        if "Messages" in response:
            for msg in response["Messages"]:
                # First "process" the message
                logger.info(f"Message: {msg['Body']}")
                # Then delete the message, otherwise it will return
                # to the queue after VisibilityTimeout seconds
                sqs.delete_message(
                    QueueUrl=queue_url,
                    ReceiptHandle=msg["ReceiptHandle"],
                )
        else:
            logger.info("No messages available")
except KeyboardInterrupt:
    print()

A few words about the app and setup. boto3 is the AWS-provided Python package (the AWS SDK for Python). It is available in the usual Python package repositories, or you can install the python3-boto3 package on Debian Linux with APT.

You probably noticed that there is no authentication code in the app at all. That is because I ran the app on an EC2 instance in my VPC in AWS, and I had assigned the instance an IAM role that allows it to access all SQS queues within my AWS account. That way the EC2 instance is already authenticated and authorized to use my SQS queues. (Other ways to authenticate include providing the IAM credentials as arguments to the boto3.client() call or in environment variables, but let’s not go too deep into AWS IAM in this post.)

Back to the topic of long polling. It is the WaitTimeSeconds=20 argument that makes this example a long polling one.
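As a small sketch of how that one argument switches between the two modes, the helper below builds the keyword arguments for receive_message and checks the documented ranges (WaitTimeSeconds 0 to 20, MaxNumberOfMessages 1 to 10). The helper name receive_params is hypothetical, not part of boto3, and the queue URL in the usage note is a placeholder.

```python
def receive_params(queue_url, wait_seconds=20, max_messages=1, visibility_timeout=30):
    """Build keyword arguments for sqs.receive_message().

    wait_seconds=0 requests short polling (return right away),
    1..20 requests long polling with that maximum wait.
    """
    if not 0 <= wait_seconds <= 20:
        raise ValueError("WaitTimeSeconds must be between 0 and 20")
    if not 1 <= max_messages <= 10:
        raise ValueError("MaxNumberOfMessages must be between 1 and 10")
    return {
        "QueueUrl": queue_url,
        "MaxNumberOfMessages": max_messages,
        "WaitTimeSeconds": wait_seconds,
        "VisibilityTimeout": visibility_timeout,
    }
```

In the app above the call would then read `sqs.receive_message(**receive_params(queue_url))` for long polling, or `receive_params(queue_url, wait_seconds=0)` for short polling.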

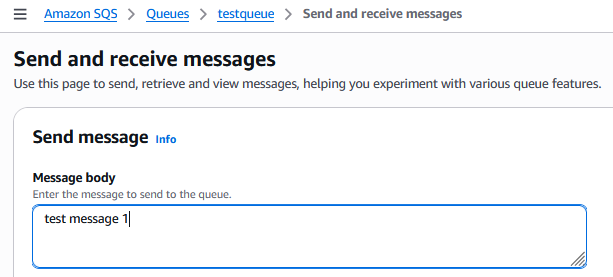

For sending test messages to the SQS queue I will just use the AWS console in the web browser:

This is what happens when I run the app, send a couple of messages from the console, stop the app, rerun it and send yet another message to the queue before finally stopping the app again:

(venv) markku@ip-172-30-0-234:~$ python receive.py
21:04:20 Found credentials from IAM Role: debian13-ServerRole-3LwmR8vmBf7E
21:04:20 Started
21:04:41 No messages available
21:05:01 No messages available
21:05:08 Message: test message 1
21:05:28 No messages available
21:05:32 Message: test message 2
21:05:52 No messages available
^C
(venv) markku@ip-172-30-0-234:~$ python receive.py
21:06:32 Found credentials from IAM Role: debian13-ServerRole-3LwmR8vmBf7E
21:06:32 Started
21:06:52 No messages available
21:07:01 Message: test message 3
21:07:21 No messages available
21:07:41 No messages available
^C
(venv) markku@ip-172-30-0-234:~$

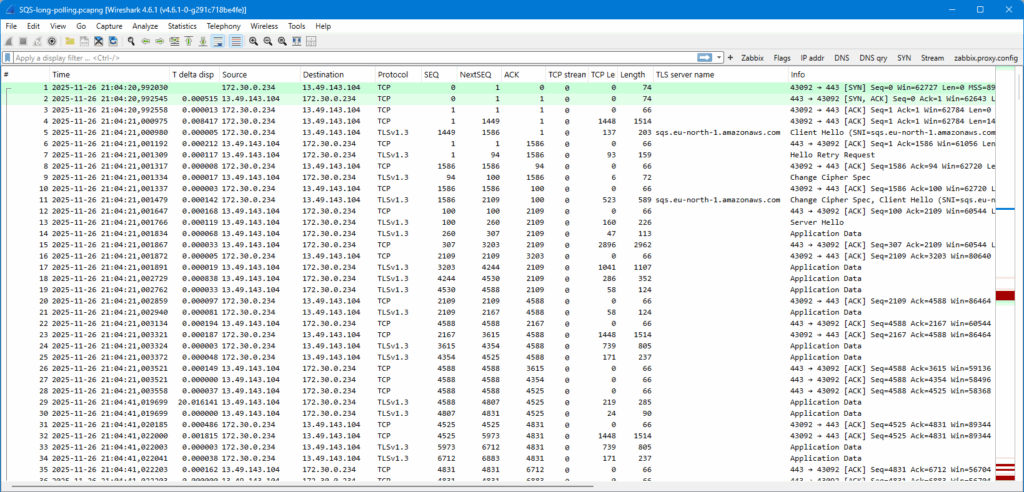

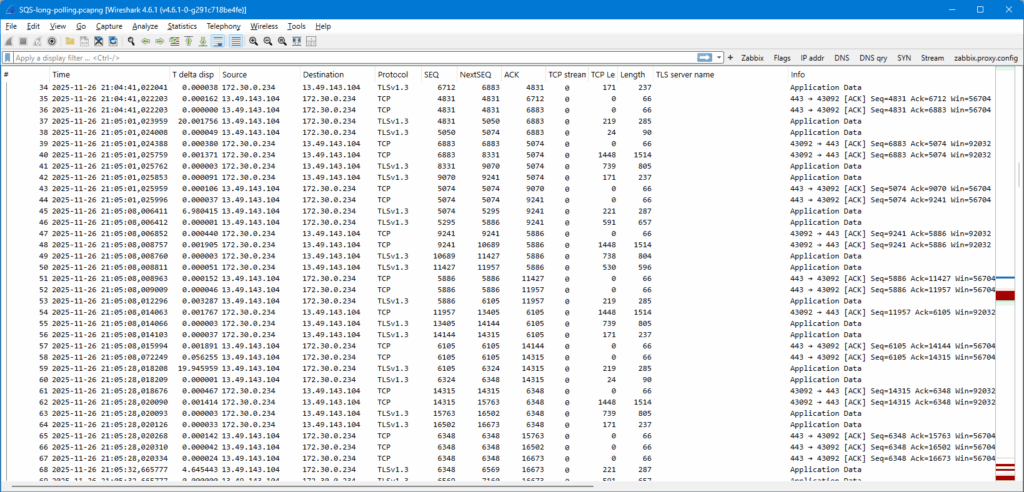

Let’s compare this output to the packet capture I took with Wireshark (using sshdump, by the way: Wireshark connected to my EC2 instance over SSH and ran tcpdump there, piping the data from the EC2 instance to Wireshark for saving and analysis).

SQS-long-polling.pcapng (github.com)

At 21:04:20 the SQS connection was initiated. The first 28 packets were exchanged right away, most likely the TCP and TLS handshakes followed by the first ReceiveMessage request (the API is used over HTTPS, so the payload itself is encrypted in the capture). Note that the last packets in that batch, 26-28, are just empty ACKs from the SQS endpoint, so the client was the last one to send any actual data.

Then there was silence for 20 seconds, and at 21:04:41 (packet 29) the SQS endpoint suddenly sent some data. At the same time the app logged “No messages available”, so that was what it got from the queue, just an empty response.

The client then sent data again, making a new long polling request. And the SQS endpoint again responded after 20 seconds at packet 37 at 21:05:01.

The same pattern continued: the client sent data, but this time the SQS endpoint responded after only ~7 seconds, at packet 45 at 21:05:08. That’s when “test message 1” was received in the app.

And so on.
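The same rhythm can be read straight from the app log of the first run. A minimal sketch that computes the gaps between consecutive log lines (the function name gaps_in_seconds is made up for this example, and the botocore credentials line is left out since it is not part of the polling loop):

```python
from datetime import datetime

# Log lines of the first run, copied from the output above
LOG = """\
21:04:20 Started
21:04:41 No messages available
21:05:01 No messages available
21:05:08 Message: test message 1
21:05:28 No messages available
21:05:32 Message: test message 2
21:05:52 No messages available
"""


def gaps_in_seconds(log_text):
    """Return the number of seconds between consecutive log lines."""
    times = [
        datetime.strptime(line.split()[0], "%H:%M:%S")
        for line in log_text.splitlines()
        if line.strip()
    ]
    return [int((later - earlier).total_seconds()) for earlier, later in zip(times, times[1:])]


print(gaps_in_seconds(LOG))  # [21, 20, 7, 20, 4, 20]
```

Every "No messages available" line comes about 20 seconds after the previous event, while the two messages arrived after 7 and 4 seconds. The first gap is 21 seconds, presumably because it also includes the connection setup and the log timestamps only have one-second resolution.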

The TCP connection between the client and the SQS endpoint stayed established while SQS waited for a message to become available in the queue. When a message arrived, it was sent to the client immediately; otherwise SQS told the client after 20 seconds that there was no message.

The capture also shows a client-side implementation detail: the same TCP session was used for all SQS API calls until the app was stopped with Ctrl-C. After the restart (at 21:06:32) a new TCP session was opened and the rest of the calls used that one. This is good and efficient when the app makes the long polls in a loop, as there is no need to open a new TCP session for every SQS API call.
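Connection reuse like this can be illustrated locally without AWS. The sketch below is not what boto3 does internally; it is a stand-in that uses only the Python standard library, with a tiny local HTTP server that replies with the client's source port. If the port stays the same across requests, they travelled over the same TCP session. The names EchoPortHandler and client_ports are made up for this example.

```python
import http.client
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class EchoPortHandler(BaseHTTPRequestHandler):
    protocol_version = "HTTP/1.1"  # allow several requests per TCP connection

    def do_GET(self):
        # Reply with the source port of the client's TCP connection
        body = str(self.client_address[1]).encode()
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, *args):
        pass  # keep the demo output quiet


def client_ports(reuse, calls=3):
    """Make `calls` requests and return the client source port seen for each."""
    server = ThreadingHTTPServer(("127.0.0.1", 0), EchoPortHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    ports = []
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port)
        for _ in range(calls):
            conn.request("GET", "/")
            ports.append(conn.getresponse().read().decode())
            if not reuse:
                conn.close()  # the next request() reconnects automatically
        conn.close()
    finally:
        server.shutdown()
        server.server_close()
    return ports


if __name__ == "__main__":
    print("reused connection:", client_ports(reuse=True))
    print("new connection per call:", client_ports(reuse=False))
```

With reuse=True all requests report the same source port, so they shared one TCP session. With reuse=False every request needs a new TCP handshake first (and with HTTPS a new TLS handshake as well).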

Conclusion

This long polling behavior is basic TCP after all. Once the data sent by one party is acknowledged by the receiving party, the TCP session can just sit there waiting for either side to continue with data transfer. In this SQS case the client initiates the connection and sends the request, and when the SQS endpoint has no data to respond with right away, it simply waits for the right moment.
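The idea can be reproduced with plain sockets. This is a toy sketch only: it has nothing to do with how SQS is implemented (the real API is HTTPS), the one-line "poll" protocol is invented, and all function names are hypothetical. The server reads the request, then holds the connection silently until a message shows up or the maximum wait time passes.

```python
import queue
import socket
import threading
import time


def serve_one_long_poll(listener, messages, max_wait):
    """Accept one connection, read the request, then keep the connection open
    until a message is available or max_wait seconds have passed."""
    conn, _ = listener.accept()
    with conn:
        conn.recv(1024)  # the client's "poll" request
        try:
            body = messages.get(timeout=max_wait)
        except queue.Empty:
            body = ""  # empty response: no messages available
        conn.sendall(body.encode() + b"\n")


def long_poll(address):
    """Send one poll request; return (response body, seconds waited)."""
    with socket.create_connection(address) as sock:
        sock.sendall(b"poll\n")
        started = time.monotonic()
        with sock.makefile("rb") as reader:
            line = reader.readline()  # blocks while the session is silent
        return line.decode().strip(), time.monotonic() - started


def demo(max_wait, send_after=None):
    """Run one long poll against a local server, optionally queueing a message."""
    messages = queue.Queue()
    listener = socket.create_server(("127.0.0.1", 0))
    server = threading.Thread(target=serve_one_long_poll, args=(listener, messages, max_wait))
    server.start()
    if send_after is not None:
        threading.Timer(send_after, messages.put, args=("hello",)).start()
    result = long_poll(listener.getsockname())
    server.join()
    listener.close()
    return result


if __name__ == "__main__":
    print(demo(max_wait=1.0))                  # empty body after about 1 second
    print(demo(max_wait=1.0, send_after=0.3))  # "hello" after about 0.3 seconds
```

Between the request and the response no data crosses the connection at all, which matches the silent periods seen in the packet capture.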

In SQS long polling the maximum wait time is 20 seconds, although nothing in TCP itself would prevent a longer wait. Keep in mind that any session-aware network middleboxes (like proxies, firewalls or load balancers) can get upset about long-lasting TCP sessions that don’t send any data, so a silent TCP session is always a risk. I think 20 seconds is a very reasonable compromise between frequent polling requests and the risk of prematurely killed TCP sessions while waiting for messages to arrive.

I assume that other message queues (like Redis, RabbitMQ and others) work similarly. Let me know in the comments if you have further technical information about those.