The most common way of making HTTP/HTTPS requests in Python applications is the requests library. By default it doesn’t set any timeouts for its operations, which means that in some circumstances a simple requests.get() call might not return for a very long time, or at all. Depending on the nature of the application, this is usually not desirable. This post demonstrates different ways to handle those situations.

But first, let’s see what it actually looks like when no timeout is configured. Here are two examples (the statements were pasted from a text editor, so no user input latency is included in the time measurement). The first one:

```python
>>> import time
>>> import requests
>>> start_time = time.monotonic()
>>> try:
...     r = requests.get("http://192.168.7.11/")
... except Exception as e:
...     print(type(e))
...     print(e)
...
<class 'requests.exceptions.ConnectionError'>
HTTPConnectionPool(host='192.168.7.11', port=80): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f2bd655eb20>: Failed to establish a new connection: [Errno 113] No route to host'))
>>> stop_time = time.monotonic()
>>> print(round(stop_time-start_time, 2), "seconds")
3.08 seconds
```

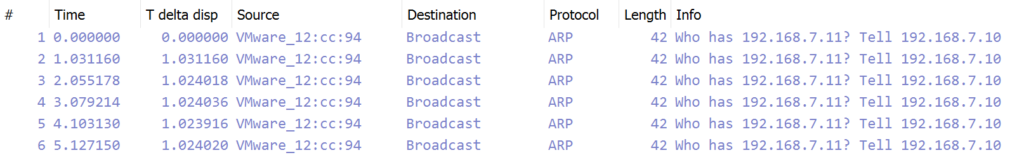

Captured packets for the first attempt:

Another:

```python
>>> start_time = time.monotonic()
>>> try:
...     r = requests.get("http://192.168.8.1/")
... except Exception as e:
...     print(type(e))
...     print(e)
...
<class 'requests.exceptions.ConnectionError'>
HTTPConnectionPool(host='192.168.8.1', port=80): Max retries exceeded with url: / (Caused by NewConnectionError('<urllib3.connection.HTTPConnection object at 0x7f2bd65a48e0>: Failed to establish a new connection: [Errno 110] Connection timed out'))
>>> stop_time = time.monotonic()
>>> print(round(stop_time-start_time, 2), "seconds")
130.41 seconds
```

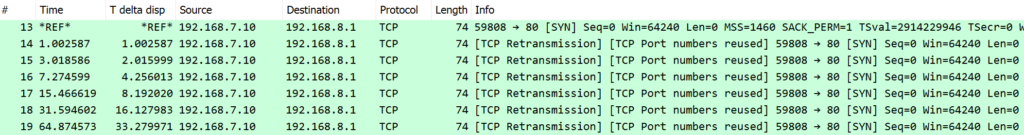

Captured packets for the second attempt:

As we can see, even without any timeout defined in the requests.get() call, there definitely was a timeout involved.

Note: The test host here was a Debian 11 Bullseye server with a Linux kernel from the 5.10 series. With another operating system or kernel the results will likely be different.

What actually happened is that requests used urllib3, which builds on http.client, which in turn uses the socket module. It was the socket.connect() call at the bottom of this stack that eventually raised an error when the connection attempt either failed or got no response at all.
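To make that bottom layer a bit more concrete, here is a minimal stdlib sketch of roughly what a connect with and without a timeout boils down to. It is an illustration, not the actual urllib3 code, and `tcp_connect` is a hypothetical helper that only handles IPv4. With `settimeout(None)` the socket stays in blocking mode, so `connect()` waits as long as the operating system keeps trying; with a number, `connect()` raises `socket.timeout` (an alias of `TimeoutError` since Python 3.10) once that many seconds have passed.

```python
import socket


def tcp_connect(host, port, timeout=None):
    """Open an IPv4 TCP connection (hypothetical helper for illustration).

    timeout=None leaves the socket in blocking mode: connect() returns only
    when the OS TCP stack succeeds or gives up on its own schedule.
    A number makes connect() raise socket.timeout after that many seconds.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.settimeout(timeout)
    try:
        sock.connect((host, port))
    except OSError:
        # socket.timeout and "No route to host" are both OSError subclasses
        sock.close()
        raise
    return sock
```

For example, `tcp_connect("192.168.8.1", 80, timeout=2)` would be expected to give up after about two seconds instead of waiting for the kernel’s own retry schedule.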

In the first example the connection attempt was made to a host in the local subnet, but the destination host did not exist, so there was no ARP response. The socket call failed after only about three seconds with "No route to host" (errno 113, EHOSTUNREACH), even though the host networking stack kept sending ARP requests for another two seconds.

The second example was a remote destination that did not respond, because the destination IP was incorrect, a firewall dropped the packets, or some other error prevented any response. In this case the TCP stack made its usual retransmission attempts with exponentially increasing delays between them (1, 2, 4, 8, 16 and ~32 seconds), then waited roughly another minute after the last one, and finally gave up at about 130 seconds of total waiting. (By the way, no ARP request/response was shown because the host already had the local router’s MAC address in its cache.) I also ran the same scenario on a Debian 10 Buster server (with kernel 4.19), and there the timeout happened at about 30 seconds.
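The arithmetic behind that total can be sketched as follows. The sketch assumes Linux-style behaviour with a 1-second initial retransmission timeout and `net.ipv4.tcp_syn_retries = 6` (the usual Linux default, but an assumption about the test host, not something measured here): the SYN is resent after 1, 2, 4, 8, 16 and 32 seconds, and after the last retransmission the stack waits one more doubled interval before giving up. `syn_retry_timeline` and `read_syn_retries` are hypothetical helper names.

```python
from pathlib import Path


def syn_retry_timeline(initial_rto=1.0, syn_retries=6):
    """Return (send_times, give_up_time) in seconds for a SYN with exponential backoff.

    Assumes the retransmission timeout starts at initial_rto, doubles after
    every attempt, and that the stack gives up one full timeout after the
    last retransmission. Real kernels may add jitter or cap the timeout.
    """
    send_times = [0.0]  # the original SYN
    rto = initial_rto
    for _ in range(syn_retries):
        send_times.append(send_times[-1] + rto)
        rto *= 2
    give_up_time = send_times[-1] + rto
    return send_times, give_up_time


def read_syn_retries(default=6):
    """Read net.ipv4.tcp_syn_retries on Linux; return the default elsewhere."""
    try:
        return int(Path("/proc/sys/net/ipv4/tcp_syn_retries").read_text().strip())
    except (OSError, ValueError):
        return default


times, give_up = syn_retry_timeline()
print(times)    # [0.0, 1.0, 3.0, 7.0, 15.0, 31.0, 63.0]
print(give_up)  # 127.0
# The same estimate using this machine's setting (result depends on the host):
print(syn_retry_timeline(syn_retries=read_syn_retries())[1])
```

About 127 seconds with these assumptions, in the same ballpark as the ~130 seconds measured above. A host with a different `tcp_syn_retries` value would give up at a different point.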

Let’s try adjusting the timeout now with the basic timeout argument in requests.get():

```python
>>> start_time = time.monotonic()
>>> try:
...     r = requests.get("http://192.168.7.11/", timeout=2)
... except Exception as e:
...     print(type(e))
...     print(e)
...
<class 'requests.exceptions.ConnectTimeout'>
HTTPConnectionPool(host='192.168.7.11', port=80): Max retries exceeded with url: / (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x7f2bd656b070>, 'Connection to 192.168.7.11 timed out. (connect timeout=2)'))
>>> stop_time = time.monotonic()
>>> print(round(stop_time-start_time, 2), "seconds")
2.01 seconds
```

And with the remote destination:

```python
>>> start_time = time.monotonic()
>>> try:
...     r = requests.get("http://192.168.8.1/", timeout=2)
... except Exception as e:
...     print(type(e))
...     print(e)
...
<class 'requests.exceptions.ConnectTimeout'>
HTTPConnectionPool(host='192.168.8.1', port=80): Max retries exceeded with url: / (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x7f2bd6556d30>, 'Connection to 192.168.8.1 timed out. (connect timeout=2)'))
>>> stop_time = time.monotonic()
>>> print(round(stop_time-start_time, 2), "seconds")
2.0 seconds
```

In both the local subnet and remote cases, the raised exception changed from requests.ConnectionError to requests.ConnectTimeout, and the timeout happened in two seconds, just as expected.
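It is worth knowing that `requests.exceptions.ConnectTimeout` is a subclass of both `ConnectionError` and `Timeout`, while `ReadTimeout` only subclasses `Timeout`. So if you want to handle these cases differently, check the more specific class first. A minimal sketch; `describe_failure` and `fetch` are hypothetical helper names:

```python
import requests


def describe_failure(exc):
    """Classify a requests exception (hypothetical helper for illustration)."""
    # Order matters: ConnectTimeout is also a ConnectionError, so test it first.
    if isinstance(exc, requests.exceptions.ConnectTimeout):
        return "connect timeout"
    if isinstance(exc, requests.exceptions.ReadTimeout):
        return "read timeout"
    if isinstance(exc, requests.exceptions.ConnectionError):
        return "connection error"
    return "other"


def fetch(url, timeout=2):
    """Return the response, or None after printing why the request failed."""
    try:
        return requests.get(url, timeout=timeout)
    except requests.exceptions.RequestException as exc:
        print(describe_failure(exc), "-", exc)
        return None
```

Catching `requests.exceptions.ConnectionError` alone would silently include connect timeouts too, which may or may not be what you want.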

Actually, the timeout argument is a bit more versatile: you can also specify a tuple of two values, where the first value is the connect timeout and the second is the read timeout. See the timeouts section of the requests Advanced Usage documentation for more about that detail.
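The difference between the two values is easy to see against a server that accepts the TCP connection but never answers. The sketch below uses a local listening socket that never calls `accept()`: the kernel still completes the TCP handshake, so the connect phase succeeds quickly and it is the read timeout that fires. `silent_server` and `timed_get` are hypothetical names for this demo. Note that, per the requests documentation, the read timeout applies to each wait for data from the server, not to downloading the whole response.

```python
import socket
import time

import requests


def silent_server():
    """Listen on a free localhost port but never accept or respond (demo fixture)."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.bind(("127.0.0.1", 0))
    srv.listen(5)
    return srv


def timed_get(url, timeout):
    """Return (exception class name or None, elapsed seconds) for a GET request."""
    session = requests.Session()
    session.trust_env = False  # ignore proxy environment variables for this demo
    start = time.monotonic()
    try:
        session.get(url, timeout=timeout)
        outcome = None
    except requests.exceptions.RequestException as exc:
        outcome = type(exc).__name__
    finally:
        session.close()
    return outcome, round(time.monotonic() - start, 2)


srv = silent_server()
url = f"http://127.0.0.1:{srv.getsockname()[1]}/"
# timeout=(connect, read): should report ReadTimeout after roughly half a second
print(timed_get(url, timeout=(2, 0.5)))
srv.close()
```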

Extra option: instead of an int/float value or a tuple, the timeout argument also accepts a urllib3.Timeout instance, like this:

```python
>>> from urllib3 import Timeout
>>> start_time = time.monotonic()
>>> try:
...     r = requests.get("http://192.168.8.1/", timeout=Timeout(connect=2, read=60))
... except Exception as e:
...     print(type(e))
...     print(e)
...
<class 'requests.exceptions.ConnectTimeout'>
HTTPConnectionPool(host='192.168.8.1', port=80): Max retries exceeded with url: / (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x7f2bd6556910>, 'Connection to 192.168.8.1 timed out. (connect timeout=2)'))
>>> stop_time = time.monotonic()
>>> print(round(stop_time-start_time, 2), "seconds")
2.01 seconds
```

I don’t know whether there are other reasons for using a Timeout object instead of a tuple of values, apart from making the configuration more explicit: timeout=Timeout(connect=x, read=y) instead of timeout=(x, y).
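One possible additional reason: `urllib3.Timeout` also has a `total` argument, which a plain tuple cannot express. According to the urllib3 documentation it combines the connect and read timeouts, so the read timeout becomes whatever is left over after connecting, and when both `total` and a specific timeout are given, the shorter one applies. It is still not a hard wall-clock limit on downloading a whole response body. This small sketch only builds `Timeout` objects and inspects them, without any network traffic; the values are arbitrary examples.

```python
from urllib3 import Timeout

# Separate limits, equivalent to timeout=(2, 60) in requests.
per_phase = Timeout(connect=2, read=60)

# Overall budget of 10 s, with connecting capped at 2 s and reads at 5 s.
budgeted = Timeout(total=10, connect=2, read=5)

print(per_phase.connect_timeout, per_phase.read_timeout)  # 2 60
print(budgeted.connect_timeout, budgeted.total)           # 2 10

# Usage with requests (not executed here): requests.get(url, timeout=budgeted)
```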

Okay, now we know how to specify the timeout for individual requests calls. Now it’s just a matter of remembering to add it to every call separately… or maybe not. Instead, we can use a session and a transport adapter to set a timeout for all calls:

```python
>>> from requests.adapters import HTTPAdapter
>>> class TimeoutHTTPAdapter(HTTPAdapter):
...     def __init__(self, *args, **kwargs):
...         if "timeout" in kwargs:
...             self.timeout = kwargs["timeout"]
...             del kwargs["timeout"]
...         else:
...             self.timeout = 5  # or whatever default you want
...         super().__init__(*args, **kwargs)
...     def send(self, request, **kwargs):
...         kwargs["timeout"] = self.timeout
...         return super().send(request, **kwargs)
...
>>> session = requests.Session()
>>> adapter = TimeoutHTTPAdapter(timeout=(1,3))
>>> session.mount("http://", adapter)
>>> session.mount("https://", adapter)
>>> start_time = time.monotonic()
>>> try:
...     r = session.get("http://192.168.8.1/")
... except Exception as e:
...     print(type(e))
...     print(e)
...
<class 'requests.exceptions.ConnectTimeout'>
HTTPConnectionPool(host='192.168.8.1', port=80): Max retries exceeded with url: / (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x7f2bd655ebe0>, 'Connection to 192.168.8.1 timed out. (connect timeout=1)'))
>>> stop_time = time.monotonic()
>>> print(round(stop_time-start_time, 2), "seconds")
1.01 seconds
```

The instance of the custom TimeoutHTTPAdapter class was mounted on the session for all http:// and https:// URLs, and the GET request was then executed via the session object (session.get()), not via the global requests.get() function.

What the TimeoutHTTPAdapter class actually does is override any call-specific timeout argument with the instance’s timeout attribute when sending requests. Because of that, the timeout=4 argument here is effectively ignored:

```python
>>> try:
...     r = session.get("http://192.168.8.1/", timeout=4)
... except Exception as e:
...     print(type(e))
...     print(e)
...
<class 'requests.exceptions.ConnectTimeout'>
HTTPConnectionPool(host='192.168.8.1', port=80): Max retries exceeded with url: / (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x7f2bd650bf40>, 'Connection to 192.168.8.1 timed out. (connect timeout=1)'))
```

If you like, you can modify the TimeoutHTTPAdapter.send() method to support call-level overrides:

```python
>>> class TimeoutHTTPAdapter(HTTPAdapter):
...     def __init__(self, *args, **kwargs):
...         if "timeout" in kwargs:
...             self.timeout = kwargs["timeout"]
...             del kwargs["timeout"]
...         else:
...             self.timeout = 5  # or whatever default you want
...         super().__init__(*args, **kwargs)
...     def send(self, request, **kwargs):
...         # .get() avoids a KeyError if send() is called without a timeout
...         if kwargs.get("timeout") is None:
...             kwargs["timeout"] = self.timeout
...         return super().send(request, **kwargs)
...
>>> session = requests.Session()
>>> adapter = TimeoutHTTPAdapter(timeout=(1,3))
>>> session.mount("http://", adapter)
>>> session.mount("https://", adapter)
>>> start_time = time.monotonic()
>>> try:
...     r = session.get("http://192.168.8.1/", timeout=4)
... except Exception as e:
...     print(type(e))
...     print(e)
...
<class 'requests.exceptions.ConnectTimeout'>
HTTPConnectionPool(host='192.168.8.1', port=80): Max retries exceeded with url: / (Caused by ConnectTimeoutError(<urllib3.connection.HTTPConnection object at 0x7f2bd64f5bb0>, 'Connection to 192.168.8.1 timed out. (connect timeout=4)'))
>>> stop_time = time.monotonic()
>>> print(round(stop_time-start_time, 2), "seconds")
4.01 seconds
```

In this case the session-level timeout value is used in send() only when the call-level timeout is None (which it is by default).
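Outside the interactive session, the same idea could be packaged as a small module. This is a sketch rather than a canonical implementation: `make_session` is a hypothetical helper, the `(3.05, 27)` default is only an example value, and `https://api.example.com/` is a made-up host. The extra mount relies on requests picking the adapter with the longest matching URL prefix, so one host can get its own timeout while everything else uses the general default. `Session.get_adapter()` shows which adapter a URL would use without sending anything.

```python
import requests
from requests.adapters import HTTPAdapter


class TimeoutHTTPAdapter(HTTPAdapter):
    """HTTPAdapter with a default timeout that call-level timeouts can override."""

    def __init__(self, *args, timeout=(3.05, 27), **kwargs):
        self.timeout = timeout
        super().__init__(*args, **kwargs)

    def send(self, request, **kwargs):
        # Session.request() passes timeout=None unless the caller gave one.
        if kwargs.get("timeout") is None:
            kwargs["timeout"] = self.timeout
        return super().send(request, **kwargs)


def make_session(default_timeout=(3.05, 27), per_prefix=None):
    """Build a Session whose adapters apply default timeouts (hypothetical helper).

    per_prefix maps a URL prefix to its own timeout, e.g. for one slow API host.
    """
    session = requests.Session()
    adapter = TimeoutHTTPAdapter(timeout=default_timeout)
    session.mount("http://", adapter)
    session.mount("https://", adapter)
    for prefix, timeout in (per_prefix or {}).items():
        session.mount(prefix, TimeoutHTTPAdapter(timeout=timeout))
    return session


session = make_session(per_prefix={"https://api.example.com/": (2, 60)})
# get_adapter() only looks up the adapter; no request is sent.
print(session.get_adapter("https://api.example.com/v1/items").timeout)  # (2, 60)
print(session.get_adapter("https://www.example.com/").timeout)          # (3.05, 27)
```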

Conclusion

Use a custom requests.adapters.HTTPAdapter class (like TimeoutHTTPAdapter in this post) to specify your application-level timeout values.

In the next post we will see request retries in action.