Let’s do some Docker networking with Zabbix proxies. The presented Docker configurations apply to other similar client-server applications as well, so you may find this useful even if you don’t use Zabbix.

On this longish page:

- Preparations

- Case 1: One Zabbix proxy, with the default bridge network

- Case 2: Two Zabbix proxies, with the default bridge network

- Case 3: Zabbix proxy with a macvlan network

- Case 4: Zabbix proxy with an IPvlan network

- Final notes

Preparations

I’m running the Docker service on Debian Linux 12 on a virtual machine, with no special configurations. These are the default networks:

markku@docker:~$ docker --version

Docker version 28.2.2, build e6534b4

markku@docker:~$ docker network ls

NETWORK ID NAME DRIVER SCOPE

714b8349c1fc bridge bridge local

83b1f60b4423 host host local

4a3a4b50fa94 none null local

markku@docker:~$

The bridge network is configured with the Docker-default 172.17.0.0/16 subnet:

markku@docker:~$ docker network inspect bridge | jq '.[0] | { bridge_name: .Options["com.docker.network.bridge.name"], subnet: .IPAM.Config[0].Subnet, gateway: .IPAM.Config[0].Gateway }'

{

"bridge_name": "docker0",

"subnet": "172.17.0.0/16",

"gateway": "172.17.0.1"

}

markku@docker:~$ ip addr show dev docker0

6: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN group default

link/ether 5e:69:30:f1:56:bd brd ff:ff:ff:ff:ff:ff

inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0

valid_lft forever preferred_lft forever

markku@docker:~$

I’m using Zabbix 7.0 (currently the latest LTS version) components in this demo. The official Zabbix container installation instructions are at https://www.zabbix.com/documentation/7.0/en/manual/installation/containers. I’ll be using the SQLite3 variant of Zabbix proxy, so https://hub.docker.com/r/zabbix/zabbix-proxy-sqlite3/ is the page for it in Docker Hub, and from there I will use the latest 7.0 version running on Alpine Linux. I’ll pull the image here first:

markku@docker:~$ docker pull zabbix/zabbix-proxy-sqlite3:alpine-7.0-latest

alpine-7.0-latest: Pulling from zabbix/zabbix-proxy-sqlite3

fe07684b16b8: Pull complete

f24c2081afc3: Pull complete

a17de01214e7: Pull complete

005744d8bec0: Pull complete

e4e57cee2e94: Pull complete

4f4fb700ef54: Pull complete

8e9c4cd821f6: Pull complete

Digest: sha256:1e4761d080dda51d05c1c4269d123c65ed0bdbb1a0761ce22cdd62b067619c5b

Status: Downloaded newer image for zabbix/zabbix-proxy-sqlite3:alpine-7.0-latest

docker.io/zabbix/zabbix-proxy-sqlite3:alpine-7.0-latest

markku@docker:~$ docker image ls

REPOSITORY TAG IMAGE ID CREATED SIZE

zabbix/zabbix-proxy-sqlite3 alpine-7.0-latest 28166d384a55 12 days ago 59.1MB

markku@docker:~$

Let’s start with the first case.

Case 1: One Zabbix proxy, with the default bridge network

I’ll get the Zabbix proxy container running right away:

markku@docker:~$ docker run --name Proxy1 --detach -v proxy_databases:/var/lib/zabbix/db_data -v /etc/localtime:/etc/localtime:ro -e ZBX_HOSTNAME=Proxy1 -e ZBX_SERVER_HOST=192.168.7.80 --restart unless-stopped -p 10051:10051 zabbix/zabbix-proxy-sqlite3:alpine-7.0-latest

4e4ad9ed24b1f4ddcad05e8a1826f5d4369a8439bab1ecb756686f2e3485a297

markku@docker:~$

That’s a long command, so let’s see what the options do:

- --name Proxy1 defines the container name

- --detach means that the container will run in the background

- -v proxy_databases:/var/lib/zabbix/db_data creates a volume “proxy_databases” on the Docker host, and it will be mounted as /var/lib/zabbix/db_data in the container, to be used for the SQLite3 database (see https://hub.docker.com/r/zabbix/zabbix-proxy-sqlite3)

- -v /etc/localtime:/etc/localtime:ro mounts the host’s local time information as read-only, effectively setting the timezone on the container to the same as on the host

- The two -e options define the Zabbix proxy configurations: instead of a zabbix_proxy.conf file the configurations are set with the environment variables (see https://hub.docker.com/r/zabbix/zabbix-proxy-sqlite3)

- --restart unless-stopped defines that the container is to be started whenever the Docker service is started, unless the container was explicitly stopped

- -p 10051:10051 maps the TCP port 10051 on the host to the container port 10051, effectively making it possible for Zabbix active agents to connect to the proxy container.
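Because the same long command comes back for every proxy, it can be handy to wrap it in a small shell function. The following is only a sketch: the function name proxy_run_cmd is made up for illustration, and it just prints the command (a dry run) with the options listed above, so nothing is started until you decide to run the output.

```shell
# Hypothetical helper (not part of Docker or Zabbix): print the "docker run"
# command for a proxy named $1. The optional $2 is the host port that gets
# published to the container port 10051 (default: 10051).
proxy_run_cmd() {
  proxy_name=$1
  host_port=${2:-10051}
  # Every line except the last one ends with a backslash continuation.
  printf '%s \\\n' \
    "docker run --name $proxy_name --detach" \
    "  -v proxy_databases:/var/lib/zabbix/db_data" \
    "  -v /etc/localtime:/etc/localtime:ro" \
    "  -e ZBX_HOSTNAME=$proxy_name" \
    "  -e ZBX_SERVER_HOST=192.168.7.80" \
    "  --restart unless-stopped" \
    "  -p $host_port:10051"
  printf '%s\n' "  zabbix/zabbix-proxy-sqlite3:alpine-7.0-latest"
}

# Show the command for Proxy1 with the default port mapping.
proxy_run_cmd Proxy1
```

After reviewing the printed command, it can be pasted into the shell as-is.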

The Zabbix proxy sends its logs to the standard output for the Docker host to save it, so let’s see the outputs right away:

markku@docker:~$ docker logs Proxy1

Preparing Zabbix proxy

** Preparing Zabbix proxy configuration file

** Updating '/etc/zabbix/zabbix_proxy.conf' parameter "ProxyMode": ''...removed

** Updating '/etc/zabbix/zabbix_proxy.conf' parameter "Server": '192.168.7.80'...updated

** Updating '/etc/zabbix/zabbix_proxy.conf' parameter "Hostname": 'Proxy1'...updated

** Updating '/etc/zabbix/zabbix_proxy.conf' parameter "HostnameItem": ''...removed

** Updating '/etc/zabbix/zabbix_proxy.conf' parameter "ListenIP": ''...removed

...

Starting Zabbix Proxy (active) [Proxy1]. Zabbix 7.0.13 (revision f2c8021).

Press Ctrl+C to exit.

1:20250615:164249.496 Starting Zabbix Proxy (active) [Proxy1]. Zabbix 7.0.13 (revision f2c8021).

1:20250615:164249.496 **** Enabled features ****

1:20250615:164249.496 SNMP monitoring: YES

1:20250615:164249.496 IPMI monitoring: YES

1:20250615:164249.496 Web monitoring: YES

1:20250615:164249.496 VMware monitoring: YES

1:20250615:164249.496 ODBC: YES

1:20250615:164249.496 SSH support: YES

1:20250615:164249.496 IPv6 support: YES

1:20250615:164249.496 TLS support: YES

1:20250615:164249.496 **************************

1:20250615:164249.496 using configuration file: /etc/zabbix/zabbix_proxy.conf

1:20250615:164249.496 cannot open database file "/var/lib/zabbix/db_data/Proxy1.sqlite": [2] No such file or directory

1:20250615:164249.496 creating database ...

1:20250615:164251.735 current database version (mandatory/optional): 07000000/07000019

1:20250615:164251.735 required mandatory version: 07000000

1:20250615:164251.736 proxy #0 started [main process]

166:20250615:164251.737 proxy #1 started [configuration syncer #1]

167:20250615:164251.750 proxy #2 started [trapper #1]

...

166:20250615:164251.751 cannot obtain configuration data from server at "192.168.7.80": empty string received

173:20250615:164251.864 cannot send proxy data to server at "192.168.7.80": proxy "Proxy1" not found

173:20250615:164252.867 cannot send proxy data to server at "192.168.7.80": proxy "Proxy1" not found

173:20250615:164253.869 cannot send proxy data to server at "192.168.7.80": proxy "Proxy1" not found

...

I removed lots of output, but we can see that the Zabbix proxy process was started with the supplied configuration, and it created the database file as /var/lib/zabbix/db_data/Proxy1.sqlite, but it could not communicate with the Zabbix server. Well, that’s because I didn’t create the proxy there yet, so the server refuses to do anything with the proxy.

I’ll create the proxy “Proxy1” on the Zabbix server in the UI (in Administration – Proxies), and then check the container logs again with the “docker logs Proxy1” command:

...

173:20250615:164419.074 cannot send proxy data to server at "192.168.7.80": proxy "Proxy1" not found

173:20250615:164420.077 cannot send proxy data to server at "192.168.7.80": proxy "Proxy1" not found

166:20250615:164421.848 received configuration data from server at "192.168.7.80", datalen 5474

markku@docker:~$

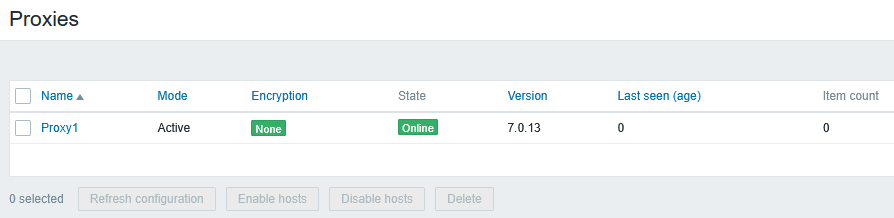

There we have it, the proxy received the configuration from the Zabbix server, and the proxy is also shown in Online state in the Zabbix UI:

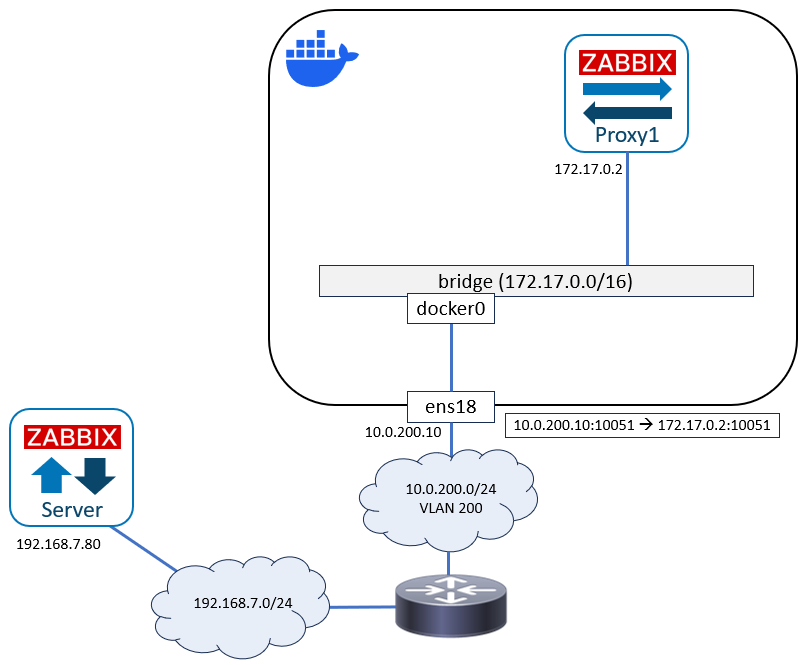

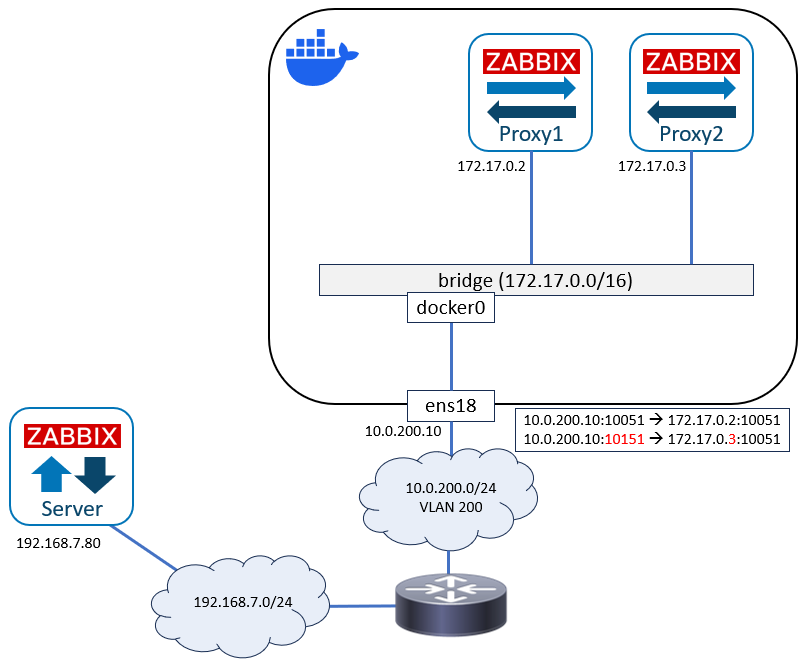

At this point, let’s see what the current network looks like:

When starting the container we didn’t specify any Docker network, so the container is using the default bridge network. Let’s see the container IP address:

markku@docker:~$ docker exec Proxy1 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eth0@if13: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether c6:b5:68:d3:68:fb brd ff:ff:ff:ff:ff:ff

inet 172.17.0.2/16 brd 172.17.255.255 scope global eth0

valid_lft forever preferred_lft forever

markku@docker:~$

There we can see the Docker-assigned 172.17.0.2/16 IP address (from the 172.17.0.0/16 network in the bridge) on the eth0 interface in the container.

Let’s see quick packet captures on the host, first on the docker0 interface:

markku@docker:~$ sudo tcpdump -n -i docker0 -c 3 port 10051

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on docker0, link-type EN10MB (Ethernet), snapshot length 262144 bytes

16:48:09.110527 IP 172.17.0.2.42816 > 192.168.7.80.10051: Flags [S], ...

16:48:09.111542 IP 192.168.7.80.10051 > 172.17.0.2.42816: Flags [S.], ...

16:48:09.111564 IP 172.17.0.2.42816 > 192.168.7.80.10051: Flags [.], ...

3 packets captured

10 packets received by filter

0 packets dropped by kernel

markku@docker:~$

Then on the ens18 interface:

markku@docker:~$ sudo tcpdump -n -i ens18 -c 3 port 10051

tcpdump: verbose output suppressed, use -v[v]... for full protocol decode

listening on ens18, link-type EN10MB (Ethernet), snapshot length 262144 bytes

16:48:51.424076 IP 10.0.200.10.47026 > 192.168.7.80.10051: Flags [S], ...

16:48:51.425105 IP 192.168.7.80.10051 > 10.0.200.10.47026: Flags [S.], ...

16:48:51.425134 IP 10.0.200.10.47026 > 192.168.7.80.10051: Flags [.], ...

3 packets captured

10 packets received by filter

0 packets dropped by kernel

markku@docker:~$

There we can see that the Docker host (when using the default bridge) translates the source address 172.17.0.2 to the host’s own IP address 10.0.200.10, when the connection is going out of the host.

Case 2: Two Zabbix proxies, with the default bridge network

For whatever reason, let’s try starting another Zabbix proxy on the same host:

markku@docker:~$ docker run --name Proxy2 --detach -v proxy_databases:/var/lib/zabbix/db_data -v /etc/localtime:/etc/localtime:ro -e ZBX_HOSTNAME=Proxy2 -e ZBX_SERVER_HOST=192.168.7.80 --restart unless-stopped -p 10051:10051 zabbix/zabbix-proxy-sqlite3:alpine-7.0-latest

6fba63a5902e0bbf1fbda2f77aaafa4afcce55dd399f7641d9df43eb9cde3870

docker: Error response from daemon: failed to set up container networking: driver failed programming external connectivity on endpoint Proxy2 (e9f147fafb45c0e738db016f1ade02933fa58dd87fadeae08714c7040f822dfc): Bind for 0.0.0.0:10051 failed: port is already allocated

Run 'docker run --help' for more information

markku@docker:~$

That didn’t go well. The reason is obvious: port 10051 on the host is already used by the Proxy1 container, so it is not possible to publish it for any other container.

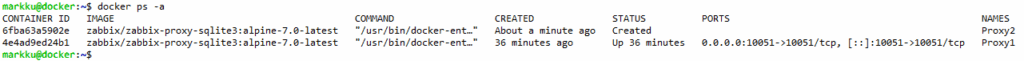

Based on the container ID that was returned, it still created the container for Proxy2 but was not able to start it. “docker ps -a” can be used to show all containers, running or not:

markku@docker:~$ docker ps -a

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6fba63a5902e zabbix/zabbix-proxy-sqlite3:alpine-7.0-latest "/usr/bin/docker-ent…" About a minute ago Created Proxy2

4e4ad9ed24b1 zabbix/zabbix-proxy-sqlite3:alpine-7.0-latest "/usr/bin/docker-ent…" 36 minutes ago Up 36 minutes 0.0.0.0:10051->10051/tcp, [::]:10051->10051/tcp Proxy1

markku@docker:~$

Wide text outputs won’t work well here, so here is the same as an image:

Let’s just remove the container and create it again with another forwarded port (10151):

markku@docker:~$ docker rm Proxy2

Proxy2

markku@docker:~$ docker run --name Proxy2 --detach -v proxy_databases:/var/lib/zabbix/db_data -v /etc/localtime:/etc/localtime:ro -e ZBX_HOSTNAME=Proxy2 -e ZBX_SERVER_HOST=192.168.7.80 --restart unless-stopped -p 10151:10051 zabbix/zabbix-proxy-sqlite3:alpine-7.0-latest

cf5bb183e79a29ce798820d08be281f8dbd2d964585e471704f55ef672298522

markku@docker:~$

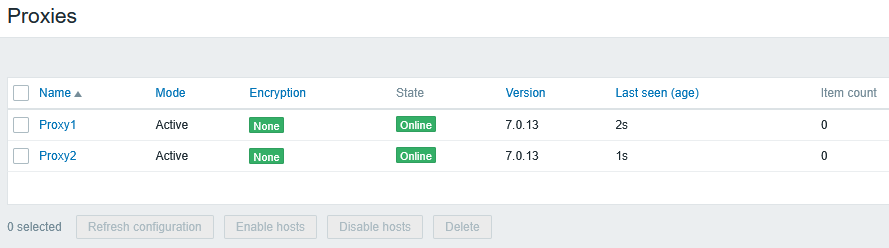

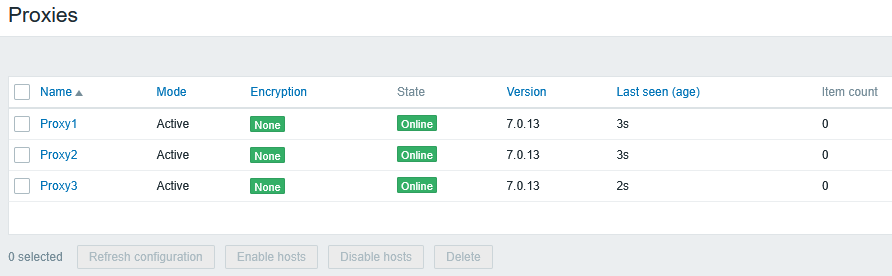

It started without errors, and after creating Proxy2 in the Zabbix UI as well, we can see both proxies Online:

On the Docker host, we can also see both ports 10051 and 10151 in the LISTEN state (in both IPv4 and IPv6):

markku@docker:~$ ss -ntl

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 4096 0.0.0.0:10151 0.0.0.0:*

LISTEN 0 4096 0.0.0.0:10051 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

LISTEN 0 4096 [::]:10151 [::]:*

LISTEN 0 4096 [::]:10051 [::]:*

markku@docker:~$

The obvious requirement now is that any Zabbix agent in active mode that should connect to Proxy2 needs to be explicitly configured to use port 10151 (instead of the default 10051) with the Docker host’s IP address 10.0.200.10. If the port is not changed, the connections will go to the Proxy1 container instead.
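On the agent side this comes down to the ServerActive parameter, which accepts an optional port after the address. Here is a minimal sketch that prints the line for each proxy; the function name is hypothetical, and which file the line belongs in (for example zabbix_agentd.conf or zabbix_agent2.conf) depends on your agent installation.

```shell
# Hypothetical helper: print a ServerActive line for a Zabbix agent in
# active mode. $1 = address of the Docker host, $2 = published proxy port.
# With the default port 10051 the port part is simply left out.
server_active_line() {
  if [ "${2:-10051}" = "10051" ]; then
    printf 'ServerActive=%s\n' "$1"
  else
    printf 'ServerActive=%s:%s\n' "$1" "$2"
  fi
}

server_active_line 10.0.200.10          # prints ServerActive=10.0.200.10 (Proxy1)
server_active_line 10.0.200.10 10151    # prints ServerActive=10.0.200.10:10151 (Proxy2)
```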

This is the network right now:

Again, this works, but is not optimal because of the non-standard TCP port for Proxy2.

Let’s see some additional networking options.

Case 3: Zabbix proxy with a macvlan network

To connect the container directly to the network without a Docker bridge and address translation, we have a couple of options. The first one here is using the macvlan network driver.

I’ll first add a new network interface to the Docker host. In my Proxmox Virtual Environment (PVE) cluster it gets the name ens19:

markku@docker:~$ ip addr show dev ens19

7: ens19: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether bc:24:11:00:02:01 brd ff:ff:ff:ff:ff:ff

altname enp0s19

inet6 fe80::be24:11ff:fe00:201/64 scope link

valid_lft forever preferred_lft forever

markku@docker:~$

Then I’ll create a new network in Docker:

markku@docker:~$ docker network create -d macvlan --subnet 10.0.201.0/24 --gateway 10.0.201.1 --ip-range 10.0.201.32/27 -o parent=ens19 macvlan201

1e3d69e6a258e1bc68bfc1d99d477d1aabfbec5b4fdd3828537035496143f4bd

markku@docker:~$ docker network ls

NETWORK ID NAME DRIVER SCOPE

714b8349c1fc bridge bridge local

83b1f60b4423 host host local

1e3d69e6a258 macvlan201 macvlan local

4a3a4b50fa94 none null local

markku@docker:~$

The ens19 interface is connected to VLAN 201 in my setup, and I have defined the subnet, gateway and IP ranges according to it.

Let’s start yet another Zabbix proxy:

markku@docker:~$ docker run --name Proxy3 --detach -v proxy_databases:/var/lib/zabbix/db_data -v /etc/localtime:/etc/localtime:ro -e ZBX_HOSTNAME=Proxy3 -e ZBX_SERVER_HOST=192.168.7.80 --restart unless-stopped --network macvlan201 zabbix/zabbix-proxy-sqlite3:alpine-7.0-latest

b32c471e2b7c1a59bef7bddd57e9ae25ef12d6756bacca1767283e24d6115ebb

markku@docker:~$ docker exec Proxy3 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

22: eth0@if7: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UP

link/ether 0e:f7:fc:ee:a6:30 brd ff:ff:ff:ff:ff:ff

inet 10.0.201.32/24 brd 10.0.201.255 scope global eth0

valid_lft forever preferred_lft forever

markku@docker:~$

Note how we specified the network with the --network macvlan201 option, and now we see the container running with IP address 10.0.201.32, picked by Docker from the IP range specified when creating the network.
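To see which addresses a range like 10.0.201.32/27 actually covers, the first and last address can be worked out with plain shell arithmetic. This is just an illustrative sketch (the function name cidr_range is made up, and it handles IPv4 only); it shows that the /27 block spans 10.0.201.32 to 10.0.201.63, which is consistent with the first container getting .32.

```shell
# Print the first and last address of an IPv4 CIDR block, one per line.
cidr_range() {
  cidr_ip=${1%/*}     # address part, e.g. 10.0.201.32
  cidr_len=${1#*/}    # prefix length, e.g. 27
  # Split the address into its four octets ($1..$4).
  old_ifs=$IFS
  IFS=.
  set -- $cidr_ip
  IFS=$old_ifs
  cidr_num=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
  cidr_mask=$(( (0xFFFFFFFF << (32 - cidr_len)) & 0xFFFFFFFF ))
  cidr_first=$(( cidr_num & cidr_mask ))
  cidr_last=$(( cidr_first | (~cidr_mask & 0xFFFFFFFF) ))
  for n in "$cidr_first" "$cidr_last"; do
    printf '%d.%d.%d.%d\n' \
      $(( (n >> 24) & 255 )) $(( (n >> 16) & 255 )) \
      $(( (n >> 8) & 255 )) $(( n & 255 ))
  done
}

# Prints 10.0.201.32 and 10.0.201.63
cidr_range 10.0.201.32/27
```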

After adding Proxy3 in Zabbix UI it also reached Online state:

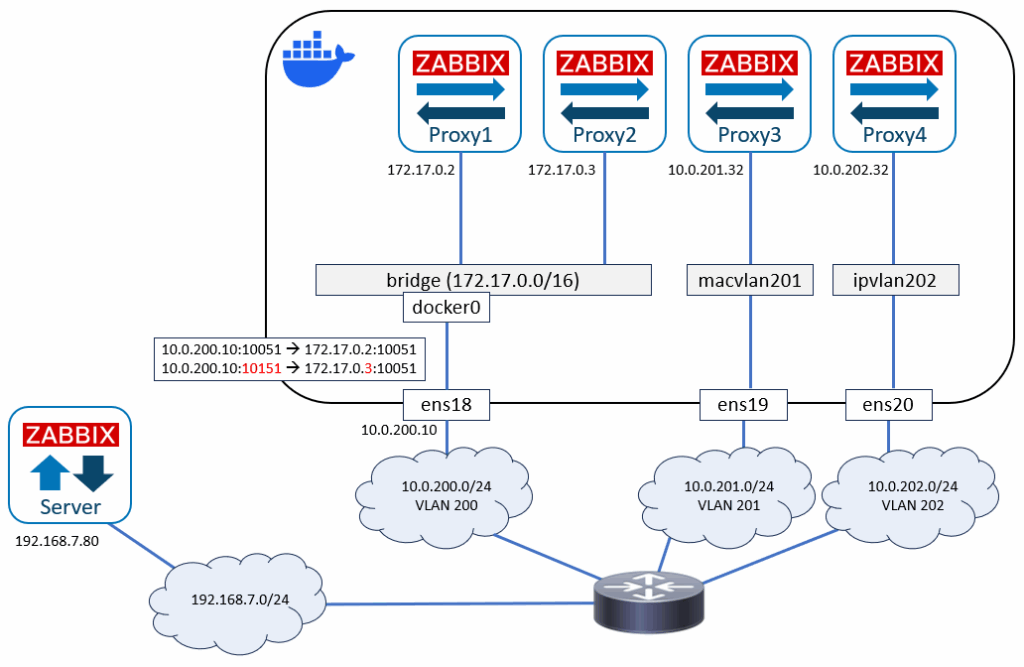

Our network now looks like this:

The notable thing now is that the IP stack on the Docker host does not participate in the IP routing of Proxy3 at all. There is no IP interface on the host for ens19 or macvlan201.

On the external router the IP neighbor table (ARP table) shows these:

admin@router:~$ ip neighbor

192.168.7.80 dev ens18 lladdr bc:24:11:8a:f5:4f REACHABLE

10.0.200.10 dev ens19 lladdr bc:24:11:d0:cc:ee REACHABLE

10.0.201.32 dev ens20 lladdr 0e:f7:fc:ee:a6:30 REACHABLE

admin@router:~$

The first entry is the Zabbix server, nothing interesting there. The second entry is the Docker host, i.e. the IP address that is used for communication with Proxy1 and Proxy2 (which are in the 172.17.0.0/16 bridge network with address translation).

The third entry is Proxy3, and its MAC address 0e:f7:fc:ee:a6:30 corresponds to the MAC address that is shown in the IP interface configuration inside the Proxy3 container above. The MAC address has been randomly assigned by the Docker host, but you can also assign it manually with a “--mac-address aa:bb:cc:dd:ee:ff” option when starting the container.
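One way to tell a generated MAC address from a hardware or hypervisor-assigned one is the “locally administered” bit, which is bit 0x02 of the first octet. Generated addresses typically have it set. The sketch below checks that bit for the two addresses seen above; the function name mac_kind is hypothetical.

```shell
# Report whether a MAC address has the locally administered bit
# (0x02 in the first octet) set.
mac_kind() {
  first_octet=${1%%:*}
  if [ $(( 0x$first_octet & 0x02 )) -ne 0 ]; then
    echo "locally administered"
  else
    echo "universally administered"
  fi
}

mac_kind 0e:f7:fc:ee:a6:30   # Proxy3 container: locally administered
mac_kind bc:24:11:00:02:01   # ens19 on the Docker host: universally administered
```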

Here we can see that the Proxy3 container is directly accessible in the physical (or virtual, as in this case) network outside the Docker host, just by normally using the assigned IP address 10.0.201.32, with no address translations or port mappings required.

It is also possible to add more containers to the same macvlan201 network if needed, and they will all get their own directly accessible IP addresses from the configured IP range.

But let’s see one more way to connect a Zabbix proxy container to the network.

Case 4: Zabbix proxy with an IPvlan network

Another way is using the IPvlan network driver in Docker. In this example I’ll be using the default L2 mode of the driver.

Again, I’ll first add a new interface to the Docker host to use with the IPvlan network. It is presented as interface ens20:

markku@docker:~$ ip addr show dev ens20

8: ens20: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether bc:24:11:00:02:02 brd ff:ff:ff:ff:ff:ff

altname enp0s20

inet6 fe80::be24:11ff:fe00:202/64 scope link

valid_lft forever preferred_lft forever

markku@docker:~$

Again, no IP configurations are needed for that interface on the Docker host. Let’s create the IPvlan network in Docker:

markku@docker:~$ docker network create -d ipvlan --subnet 10.0.202.0/24 --gateway 10.0.202.1 --ip-range 10.0.202.32/27 -o parent=ens20 ipvlan202

32f9c479df7907f43ed80c9827c8281dee5498acd246190823a638247728d98f

markku@docker:~$ docker network ls

NETWORK ID NAME DRIVER SCOPE

714b8349c1fc bridge bridge local

83b1f60b4423 host host local

32f9c479df79 ipvlan202 ipvlan local

1e3d69e6a258 macvlan201 macvlan local

4a3a4b50fa94 none null local

markku@docker:~$

Let’s start the Proxy4 container with the ipvlan202 network:

markku@docker:~$ docker run --name Proxy4 --detach -v proxy_databases:/var/lib/zabbix/db_data -v /etc/localtime:/etc/localtime:ro -e ZBX_HOSTNAME=Proxy4 -e ZBX_SERVER_HOST=192.168.7.80 --restart unless-stopped --network ipvlan202 zabbix/zabbix-proxy-sqlite3:alpine-7.0-latest

9657b37d2a31eeda0476383a8f5fff47066420d3cd1bccd145d78f02ce1b29a7

markku@docker:~$ docker exec Proxy4 ip addr

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

24: eth0@if8: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue state UNKNOWN

link/ether bc:24:11:00:02:02 brd ff:ff:ff:ff:ff:ff

inet 10.0.202.32/24 brd 10.0.202.255 scope global eth0

valid_lft forever preferred_lft forever

markku@docker:~$

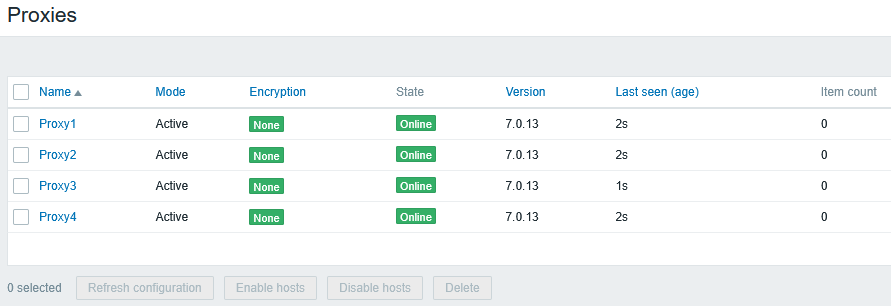

Adding Proxy4 in Zabbix UI makes the proxy connection work for it as well:

The final network:

Let’s see the IP neighbor table once more on the router:

admin@router:~$ ip neighbor

192.168.7.80 dev ens18 lladdr bc:24:11:8a:f5:4f REACHABLE

10.0.200.10 dev ens19 lladdr bc:24:11:d0:cc:ee REACHABLE

10.0.201.32 dev ens20 lladdr 0e:f7:fc:ee:a6:30 REACHABLE

10.0.202.32 dev ens21 lladdr bc:24:11:00:02:02 REACHABLE

admin@router:~$

As expected, we see one additional host in the router-connected network, but let’s see the MAC address of it more closely: bc:24:11:00:02:02

It is not a randomly generated MAC address but exactly the same MAC address that the Docker host has on the ens20 interface above.

That’s the major difference between macvlan and IPvlan networks:

- In a macvlan network all containers have unique MAC addresses

- In an IPvlan network all containers share the MAC address of the Docker host interface.

In practice it means that with IPvlan the Docker host uses the IP address of the packet to see which container the packet belongs to, while with macvlan the MAC address is used.

For the Docker-external hosts it doesn’t matter much at all: they’ll communicate with whatever MAC address they see.

The IPvlan network can also be configured in L3 mode. It basically means having a dedicated IP subnet on the Docker host for the containers. Using the IPvlan L3 mode will require either static routing on the adjacent devices, or running a dynamic routing protocol like BGP between the Docker host and the router next to it. I think that goes beyond the expected setups for Zabbix proxy container deployments, so I’ll ignore it at this time.

Final notes

In my examples I used separate interfaces (ens18, ens19 and ens20) on the Docker host for the different networks. It is also possible to use VLAN-based subinterfaces, like ens18.200, ens18.201 and ens18.202, if the connection to the Docker host is configured as a VLAN trunk (which is typically the case with physical Docker hosts).
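With VLAN subinterfaces the network creation looks the same, only the parent changes. According to the Docker macvlan documentation, a parent name containing a dot (like ens18.201) is treated as a VLAN subinterface and is created by Docker if it does not exist yet, but that is worth verifying on your own Docker version. The sketch below only prints the command; the function name is hypothetical, and the addressing simply reuses the conventions of this lab.

```shell
# Hypothetical helper: print a "docker network create" command for a macvlan
# network on a VLAN subinterface.
# $1 = trunk interface, $2 = VLAN ID, $3 = first three octets of the subnet.
# The /24 subnet, the .1 gateway and the .32/27 range mirror this post's lab
# and are assumptions for any other network.
macvlan_vlan_cmd() {
  printf 'docker network create -d macvlan --subnet %s.0/24 --gateway %s.1 --ip-range %s.32/27 -o parent=%s.%s macvlan%s\n' \
    "$3" "$3" "$3" "$1" "$2" "$2"
}

macvlan_vlan_cmd ens18 201 10.0.201
```

For an IPvlan network the same pattern should apply with -d ipvlan and the corresponding subinterface as the parent.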

The Docker bridge network uses iptables rules to configure various restrictions and address translations between the container networks and the Docker-external network. In macvlan and IPvlan networks it is not possible to use iptables on the Docker host for restricting the container traffic. In those cases, if IP-based rules are needed on the server setup, they must be implemented inside the containers, which may require additional considerations when creating the container images. I don’t think the Zabbix-provided images contain the required tooling for that.