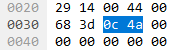

Let’s talk about byte ordering. You look at your packet bytes in Wireshark and see this data for a 16-bit integer field: 0c 4a

If the data is said to be big-endian, then it is 0x0c4a = 3146 in decimal.

If the data is said to be little-endian, then it is 0x4a0c = 18956 in decimal.

So, little-endian means that the least-significant bytes (“the little ones”) come first, and big-endian means that the most-significant bytes (“the big ones”) come first. Forget the “endian” part: just look at “little” or “big”, and that tells you what the data starts with.
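Here is a minimal Python sketch of the two readings, assuming the two bytes appear on the wire in the order 0c 4a (which is what the two values above imply). The variable names are just for illustration.

```python
# The two bytes in the order they appear in the packet (assumed: 0c then 4a).
raw = bytes([0x0C, 0x4A])

# Big-endian: the first byte is the most-significant one.
as_big = int.from_bytes(raw, byteorder="big")

# Little-endian: the first byte is the least-significant one.
as_little = int.from_bytes(raw, byteorder="little")

print(hex(as_big), as_big)        # 0xc4a 3146
print(hex(as_little), as_little)  # 0x4a0c 18956
```

Same two bytes, two different numbers, depending only on which end you treat as the "big" one.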

Makes sense, right?

“Network byte order” is big-endian. That’s by convention, to ensure different systems can communicate correctly. “Host byte order” can be little-endian or big-endian, depending on the system. If the host byte order is not the same as network byte order (big-endian), the system needs to convert the data when sending it to or reading it from the network.
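As a rough sketch of what that conversion looks like in practice, Python's standard `struct` module lets you ask for network byte order explicitly with the `!` prefix, so the result does not depend on the host. The helper names `to_wire` and `from_wire` are made up for this example.

```python
import struct
import sys

def to_wire(value: int) -> bytes:
    """Pack a 16-bit unsigned integer in network byte order (big-endian)."""
    return struct.pack("!H", value)

def from_wire(data: bytes) -> int:
    """Unpack a 16-bit unsigned integer that arrived in network byte order."""
    (value,) = struct.unpack("!H", data)
    return value

# Host byte order varies by machine: this prints "little" or "big".
print(sys.byteorder)

# The wire format is the same on every host.
print(to_wire(3146).hex())     # 0c4a
print(from_wire(b"\x0c\x4a"))  # 3146
```

In C, the classic equivalents are `htons()` and `ntohs()` for 16-bit values, which do nothing on a big-endian host and swap the bytes on a little-endian one.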

The existence of the term “network byte order” should by itself be very clear guidance to application developers about how data should be presented in networking protocols. In reality, I have seen examples of traffic, such as DHCP packets, where the host populates two-byte fields incorrectly as little-endian, causing confusion or outright incorrect behavior on the receiving side.
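To show how that kind of mistake plays out, here is a small sketch. It uses a two-byte "seconds elapsed" value as the example field; that choice and the value 5 are assumptions for illustration, not the actual field or traffic I saw.

```python
import struct

secs = 5  # hypothetical value the sender wants to put in a two-byte field

# Buggy sender: writes the field in little-endian order.
wrong_wire = struct.pack("<H", secs)  # bytes 05 00

# Correct sender: writes the field in network byte order.
right_wire = struct.pack("!H", secs)  # bytes 00 05

# The receiver follows the convention and always reads big-endian.
(seen_wrong,) = struct.unpack("!H", wrong_wire)
(seen_right,) = struct.unpack("!H", right_wire)

print(wrong_wire.hex(), seen_wrong)  # 0500 1280
print(right_wire.hex(), seen_right)  # 0005 5
```

The receiver has no way to know the sender got it wrong; it just sees 1280 instead of 5.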

See also: Endianness (Wikipedia)